Right-Sizing Your Private AI: A Guide to Choosing the Perfect On-Premise Appliance

You’ve made the strategic decision to bring your AI in-house. You’re ready for the ironclad security, predictable costs, and deep customization that an on-premise Large Language Model (LLM) offers.

Now comes the practical question: “Which machine is right for my business?”

Choosing an AI server isn’t like buying a standard computer. The most important factors are a unique set of metrics that determine how the AI will actually perform for your team. Getting this right means investing in a tool that feels powerful and seamless; getting it wrong can lead to frustration and under-utilization.

Let’s break down the four key pillars you need to consider: Users, Model Size, Performance, and Budget.

Number of Users: The Concurrency Question

This is the most important starting point: how many people will use the AI at the same time? This is called concurrency.

- 1-5 Concurrent Users (The Small Team): A single-lane road is fine. For a small team or a few individuals running intensive tasks, a powerful but singular machine works wonderfully.

- 5-50 Concurrent Users (The Department): You need a multi-lane highway. The system must handle traffic from many people across a department simultaneously without creating jams.

- 50+ Concurrent Users (The Entire Organization): You need a superhighway. The appliance must be an enterprise-grade workhorse, capable of managing a constant flow of requests.

The takeaway: An honest assessment of your team’s likely concurrent usage is the first step to right-sizing your hardware.
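If you are unsure where to start, a back-of-the-envelope calculation can help. The sketch below assumes that peak concurrency is simply your headcount multiplied by the share of people active at the same moment. That share (`peak_active_share`) is a hypothetical input you would estimate yourself; it does not come from any benchmark. Because the bands above share the boundary values 5 and 50, the sketch assigns each boundary to the smaller band.

```python
import math


def estimate_concurrent_users(total_users: int, peak_active_share: float) -> int:
    """Rough peak concurrency: headcount times the share active at once.

    peak_active_share is an assumption you supply (e.g. 0.1 for 10%).
    """
    if total_users < 0:
        raise ValueError("total_users must not be negative")
    if not 0.0 <= peak_active_share <= 1.0:
        raise ValueError("peak_active_share must be between 0 and 1")
    # Round first so floating-point noise does not push the ceiling up by one.
    return math.ceil(round(total_users * peak_active_share, 6))


def concurrency_band(concurrent_users: int) -> str:
    """Map a concurrency estimate to the bands described in this guide.

    Boundary values (5 and 50) are assigned to the smaller band by assumption.
    """
    if concurrent_users <= 5:
        return "Small Team"
    if concurrent_users <= 50:
        return "Department"
    return "Entire Organization"


if __name__ == "__main__":
    peak = estimate_concurrent_users(total_users=200, peak_active_share=0.15)
    print(peak, concurrency_band(peak))
```

A 200-person company where about 15% of staff use the AI at the same moment lands in the Department band, even though the headcount alone might suggest a larger machine.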

Model Size: Choosing the Right “Brain”

The “size” of an LLM is measured in parameters (e.g., 7B for 7 billion, 70B for 70 billion). Think of this as the engine size of your AI. A bigger engine is more powerful and capable of more complex reasoning, but it also requires more “fuel” in the form of GPU memory (VRAM).

- Small Models (7B – 13B): These are fast, efficient, and incredibly capable. They are perfect for tasks like summarization, drafting emails, and answering straightforward questions. Think of them as a responsive, turbocharged 4-cylinder engine.

- Large Models (70B+): This is the industry sweet spot for high-level performance. These models exhibit much more nuance, follow complex multi-step instructions better, and possess a deeper reasoning ability. This is the V8 engine you need for sophisticated legal analysis or complex strategic work.

- Gigantic Models (500B+): This is the frontier of AI. These models, often “Mixture-of-Experts” (MoE), offer state-of-the-art performance, tackling problems with a level of nuance that approaches human expertise. They are reserved for the most demanding applications, like powering a commercial AI product or conducting advanced R&D.

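- The Hardware Connection (VRAM): You need enough VRAM to hold the model’s weights, plus working memory for active requests. A machine with 24GB of VRAM is excellent for Small models. To run a Large 70B model efficiently, you’ll want a server with 48GB of VRAM or more. That figure typically assumes the model is quantized (compressed to around 4 bits per weight); at full 16-bit precision, the weights of a 70B model alone take roughly 140GB. Running Gigantic models requires a massive amount of VRAM (often 200GB+), which is the domain of our Powerhouse tier servers.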

The takeaway: Match the model’s “brainpower” to the complexity of your tasks. More complex work demands a larger model, which in turn demands more GPU VRAM. This explanation simplifies the reality, but it gives a good first estimate of what your company might need.
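To make the link between parameters and VRAM concrete, here is a minimal sketch of the usual rule of thumb: the weights take (parameters × bits per weight ÷ 8) bytes, and you add some headroom for working memory. The 20% headroom (`overhead_fraction`) is an illustrative assumption, not a measured value; real overhead varies with context length and the number of concurrent requests.

```python
def estimate_vram_gb(
    params_billions: float,
    bits_per_weight: int = 16,
    overhead_fraction: float = 0.2,  # assumed headroom, not a measured figure
) -> float:
    """Rule-of-thumb VRAM estimate in GB for serving a model.

    Weights: params (in billions) * bits / 8 gives gigabytes directly,
    because one billion parameters at one byte each is about 1 GB.
    """
    if params_billions <= 0 or bits_per_weight <= 0:
        raise ValueError("params_billions and bits_per_weight must be positive")
    weights_gb = params_billions * bits_per_weight / 8
    return weights_gb * (1 + overhead_fraction)


if __name__ == "__main__":
    for size, bits in [(7, 16), (13, 8), (70, 4), (70, 16)]:
        print(f"{size}B at {bits}-bit: ~{estimate_vram_gb(size, bits):.0f} GB")
```

Under these assumptions, a 70B model at 4 bits per weight needs roughly 42GB, which is why it fits on a 48GB server, while the same model at 16-bit precision would need well over 140GB.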

Performance: What “Tokens per Second” Means for You

Speed is measured in tokens per second (T/s). A “token” is a piece of a word (roughly ¾ of a word), so T/s is the speed at which the AI “writes” its response. This metric directly translates to user experience.

- Low Performance (< 30 T/s): Feels like watching someone type slowly. Acceptable for background tasks, but frustrating for real-time chat.

- Interactive Performance (30-100 T/s): This is the sweet spot. The response feels conversational and fluid, perfect for chatbots and coding assistants.

- High Performance (100+ T/s): Feels nearly instantaneous. Ideal for high-throughput applications or power users generating very long responses.

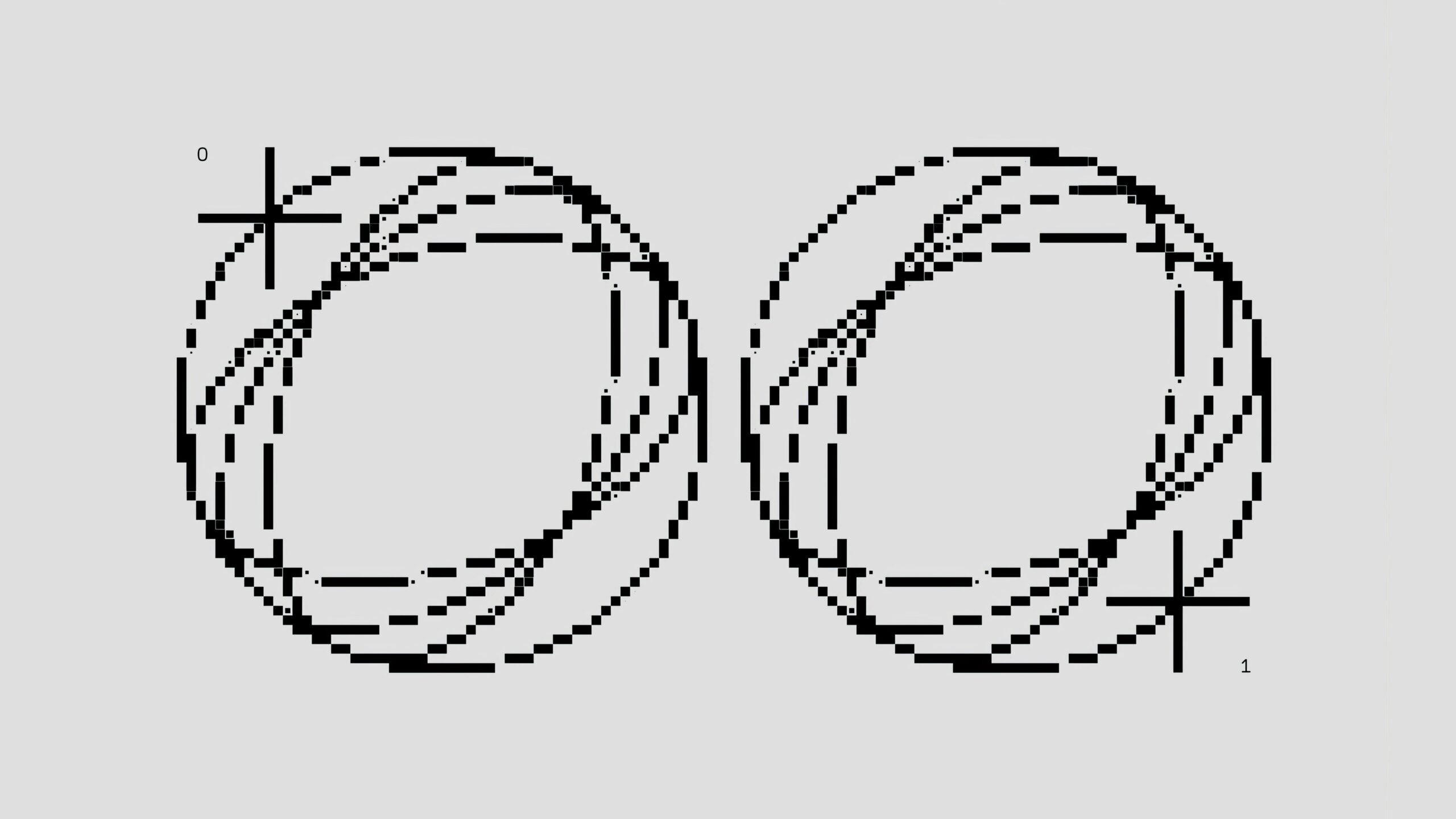

The takeaway: The required T/s depends on the job. For interactive work, aim for the “Interactive” range. Higher performance requires more powerful GPU hardware. The visualizer at https://tokens-per-second-visualizer.tiiny.site/ shows what these different speeds look like in practice.
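To translate T/s into waiting time, the sketch below converts a response length in words into tokens using the ¾-word-per-token approximation from this section, then divides by the generation speed. It ignores the short delay before the first token appears, and it treats the shared boundary value of 100 T/s as “High”; both are simplifying assumptions.

```python
WORDS_PER_TOKEN = 0.75  # approximation used in this guide


def seconds_to_generate(word_count: int, tokens_per_second: float) -> float:
    """Approximate time to write a response of word_count words."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens = word_count / WORDS_PER_TOKEN
    return tokens / tokens_per_second


def experience_band(tokens_per_second: float) -> str:
    """Classify a speed using the three ranges described above."""
    if tokens_per_second < 30:
        return "Low"
    if tokens_per_second < 100:
        return "Interactive"
    return "High"  # 100 T/s itself is counted as High by assumption


if __name__ == "__main__":
    for speed in (20, 50, 100):
        wait = seconds_to_generate(word_count=300, tokens_per_second=speed)
        print(f"{speed} T/s ({experience_band(speed)}): {wait:.0f} s for 300 words")
```

A 300-word answer is about 400 tokens, so it takes around 20 seconds at 20 T/s, 8 seconds at 50 T/s, and 4 seconds at 100 T/s.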

Your Budget: Investing in a Capability

An on-premise appliance is a one-time capital expense, an investment in a permanent business asset. Your budget will naturally guide which tier of performance and concurrency you can achieve.

- Explorer Budget (~€5k – €10k): This secures a powerful desktop-class appliance, perfect for a small team running 7B/13B models. Depending on the plan, this can be less than a team of 5 would spend on premium cloud AI subscriptions in a single year.

- Workhorse Budget (~€12k – €20k): This gets you a dedicated server built for departmental use. It has the VRAM and power to run large 70B models for dozens of concurrent users with great performance.

- Powerhouse Budget (€35k+): This is for businesses where AI is a core competitive advantage. These machines are built for maximum concurrency, the largest models, and even on-premise fine-tuning.
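A quick way to sanity-check a budget is to compare the one-time appliance cost with what you would otherwise pay in per-user cloud subscriptions. The sketch below computes a simple break-even point in months. The subscription price in the example is a hypothetical placeholder, and the calculation deliberately ignores electricity, maintenance, and staff time, so treat the result as a starting point rather than a full cost model.

```python
def breakeven_months(
    appliance_cost_eur: float,
    users: int,
    subscription_eur_per_user_month: float,
) -> float:
    """Months until cumulative subscription fees equal the appliance price.

    Simplification: running costs of the appliance are not included.
    """
    monthly_subscription_cost = users * subscription_eur_per_user_month
    if monthly_subscription_cost <= 0:
        raise ValueError("users and subscription price must be positive")
    return appliance_cost_eur / monthly_subscription_cost


if __name__ == "__main__":
    # Hypothetical figures: a €5,000 appliance vs. 5 users at €100 per month.
    months = breakeven_months(5_000, users=5, subscription_eur_per_user_month=100)
    print(f"Break-even after about {months:.0f} months")
```

Plug in your own subscription prices and headcount; the lower the per-user fee you currently pay, the longer the appliance takes to pay for itself.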

Putting It All Together: Finding Your Tier

By balancing these four factors, you can find the perfect fit for your needs.

| Appliance Tier | Ideal for (Users) | Ideal Model Size | Performance Profile | Budget Level |
| --- | --- | --- | --- | --- |
| Explorer | 1-5 Concurrent Users | 7B – 13B Models | Excellent for one, interactive for a few | Explorer (€) |
| Workhorse | 5-50 Concurrent Users | 70B+ Models | Highly interactive for many users | Workhorse (€€) |
| Powerhouse | 50-250+ Users | Multiple Large Models | Instantaneous, high-throughput | Powerhouse (€€€) |
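The table can also be read as a simple decision rule: find the tier your user count calls for, find the tier your model size calls for, and pick the larger of the two. The sketch below encodes that idea. The exact cutoffs are assumptions made for illustration: boundary user counts (5 and 50) go to the smaller tier, and models above 70B are routed to Powerhouse.

```python
TIERS = ["Explorer", "Workhorse", "Powerhouse"]


def tier_index_for_users(concurrent_users: int) -> int:
    """Tier implied by concurrency; boundaries go to the smaller tier (assumption)."""
    if concurrent_users <= 5:
        return 0
    if concurrent_users <= 50:
        return 1
    return 2


def tier_index_for_model(model_billions: float) -> int:
    """Tier implied by model size; the 13B and 70B cutoffs are illustrative."""
    if model_billions <= 13:
        return 0
    if model_billions <= 70:
        return 1
    return 2


def suggest_tier(concurrent_users: int, model_billions: float) -> str:
    """Pick the larger of the two tiers so neither need is under-served."""
    index = max(
        tier_index_for_users(concurrent_users),
        tier_index_for_model(model_billions),
    )
    return TIERS[index]


if __name__ == "__main__":
    print(suggest_tier(concurrent_users=3, model_billions=70))
```

A three-person team that wants a 70B model still lands on Workhorse here, because the model size, not the headcount, is the limiting factor.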

Choosing the right private AI solution doesn’t have to be complicated. It’s a logical process of matching your firm’s specific needs to the right tool. By getting this right, you invest in a capability that will serve you securely and cost-effectively for years to come.

Take Control of Your AI Future

Bringing your AI capabilities in-house isn’t just about technology; it’s a strategic business decision. It’s the definitive answer to the critical questions of security and customization. You get all the power of cutting-edge AI, with none of the risk.

Your AI, your data, your rules.